What exactly is cotton candy?

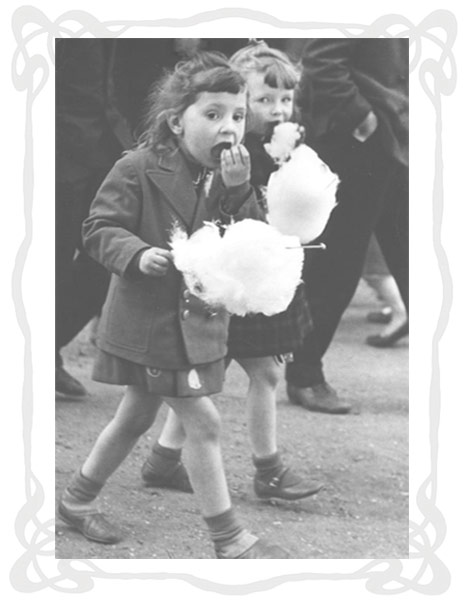

Cotton candy is a special kind of sugar confection. It consists of a characteristically light, airy mass whose color depends on the food coloring used, and it comes in many delicious flavors. In both appearance and feel, it resembles cotton wool.

Cotton candy is made by melting granulated sugar. Centrifugal force then flings the molten sugar out into the machine's bowl, where it cools and solidifies again. The fine threads formed this way are wound onto a wooden stick or a paper cone and served.

About us

Planning your big day?

We are the right choice for making your event happen. Our company is not just about cotton candy; we also offer complete event services.

We provide mascot performances, entertainment programs, magicians, sound systems, pop-up (scissor-frame) party tents, stage rental, inflatable and amusement attractions, balloon decorations, and full event-organization services.

We guarantee 100% commitment, reliability and creativity.

We are constantly expanding our range of activities, and we directly own all the attractions and productions we offer.

Every year we provide our services for corporate events, parties, client receptions, children's days, shopping centers, advertising and film productions, and private celebrations.

We guarantee agencies full discretion at the events they organize for their clients. All the productions listed are available for rent in the Czech Republic and Slovakia.

If our offer interests you and you would like to see all the services we provide, visit the link below.

Thank you for taking the time to look at our offer. We trust you will find something in it for you, and we look forward to working with you.

More information at: zabavniservis365.cz

Rental

Planning your big day?

We can also produce cotton candy for you without staff or machine rental. Individual portions are packaged to the customer's specifications and are intended for resale. All our products are made from the finest ingredients and are safe and fresh.

We offer professional cotton candy machines with an output of up to 180 portions per hour, together with trained, friendly staff. We serve the cotton candy on American-style paper cones, which can be printed with your advertising. You can invite us to cultural and social events such as:

- children's days, festivals and birthday parties

- corporate events, election campaigns

- open days

- exhibitions and trade fairs

- sporting events

We make cotton candy for you in a wide range of colors and flavors:

banana, lemon, strawberry, apple, coconut, melon, blackberry, orange, cherry, vanilla, and more.

Rental options:

Promo – A fixed hourly rate, agreed in advance, covers an unlimited number of cotton candies at your event. The client pays the fee, and the cotton candy is handed out to guests free of charge as catering.

Events – If you are organizing an event that shouldn't be without a cotton candy stand, contact us and we will arrange our participation to our mutual satisfaction.

Private celebrations – Planning your big day and want to be original? Use our rental service and make as much cotton candy as you can eat. Ask us about the terms for renting a cotton candy machine.

Packaged cotton candy – For your company, for your shop, as a gift, or just as a treat at home? No problem and no quantity limit; we also ship packaged cotton candy by courier.

Contact

For the quickest response, call +420 604 600 603

Contact information

David Jůna

Šífařská 568/18,

147 00, Praha 4,

Czech Republic

+420 604 600 603

info@zabavniservis365.cz

Bank account no. 176996868/0600, held at MONETA Money Bank.

Company ID (IČ): 717 87 615

VAT ID (DIČ): CZ7701080046

Sole trader (natural person) registered in the Trade Register since 6 February 2006

Trade license (ŽL) registration number: 310011-24397561

Write to us